Byte-swap based hash function

% Remco Bloemen % 2014-02-27, last updated 2014-03-04

This weekend I played with the SMHasher benchmark suite for non-cryptographic hash functions. And I couldn’t resist designing my own hash function, with some unique properties.

The general design of MurMurHash and some other popular hash functions is something along these lines:

hash(uint64_t* data, int length, uint64_t seed)

{

uint64_t hash0 = seed;

uint64_t hash1 = length;

while(/* more data */)

quick_mix(hash0, hash1, *data++, *data++);

perfect_mix(hash0, hash1);

return hash0, hash1;

}

This example is simplified in that it assumes the data is a multiple of 128 bits. It is easy to accommodate for different size by padding with zeros.

The quick_mix function mixes a 128 bit input into the 128 bit state.

It should mix enough of the input into the state to prevent simple

collisions. But, if the input data is large the quick_mix function

becomes the bottleneck. So we’d like to make it as simple as possible,

without getting collisions.

The perfect_mix takes the output of the inner loop and does some final

processing so that the hash function satisfies some strong statistical

properties such as the strict avalanche criterion and the bit

independence criterion.

The hash state is initialized with a seed and the length of the data.

The seed value allows independent hash values to be created using the

same algorithm. This is necessary for Bloom filters or to protect hash

tables against certain denial of service

attacks. The some implementations of quick_mix have a weakness where

an input of all zero’s does not change the state, this would mean all

inputs consisting of only zeros would map to the same hash value,

despite the fact that they could have different lengths. To compensate

for this, the length is also used as a sort of seed.

Let’s look at MurMurHash’s implementation of quick_mix (version 3,

x64, 128 bit hash):

const uint64_t c1 = 0x87c37b91114253d5ULL;

const uint64_t c2 = 0x4cf5ad432745937fULL;

void quick_mix(uint64_t& hash0, uint64_t& hash1, uint64_t input0, uint64_t input1)

{

input0 *= c1;

input0 = ROTL64(input0,31);

input0 *= c2;

hash0 ^= input0;

hash0 = ROTL64(hash0,27);

hash0 += hash1;

hash0 = hash0*5+0x52dce729;

input1 *= c2;

input1 = ROTL64(input1,33);

input1 *= c1;

hash1 ^= input1;

hash1 = ROTL64(hash1,31);

hash1 += hash0;

hash1 = hash1*5+0x38495ab5;

}

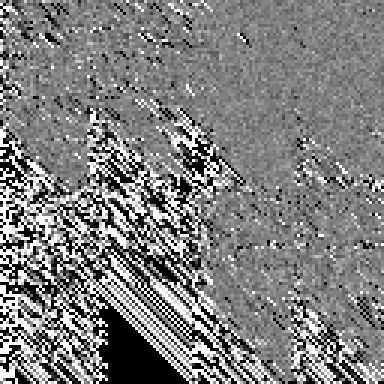

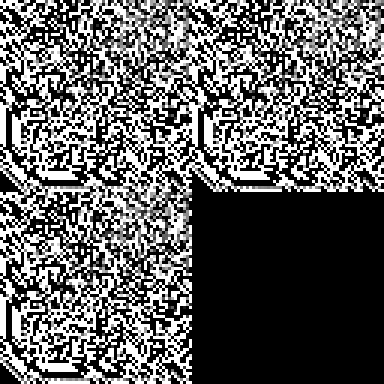

It uses multiplication, rotation, and a linear congruential generator to mix bits within an integer and it uses addition and xor to mix two integers. Let’s look at the avalanche plot :

(See Bret Mulvey’s page for what this means)

Now that is a nice texture!

The 128 columns represent the output state, the 128 rows represent the input bits. If a pixels is black, it means that there is a small chance (< 50%) that the corresponding bit of the output is changed by the corresponding bit of the input. If the pixels is white, it means there is a large chance (> 50%) that the bit is changed. Under the strict avalanche criterion, the image would be solid gray, representing all ≈50% chances. Clearly we’re not there yet.

The 4x4 block structure is because of the almost 32 bit rotates. The

diagonal stripes are caused by the multiplication, which can only mix an

input bit into higher bits. One can also see that the first 32 bits of

input affect all the bits of the state, but the last bits of the input

only affect the lower 32 bits of hash1.

Let’s run the quick_mix function once more on the same state, with all

zero inputs. From the function one can see that zero inputs mean that

the first and third line do nothing. We are only looking at the state

mixing functions in the second and fourth line. The state after a second

iteration looks like:

It looks much better, there is a large number of gray pixels and almost all the bits are affected by the input bits in some way. Let’s do one more iteration.

Even better! The state is almost entirely avalanches and even the most difficult bit, bit 128, has some gray pixels in its row. One more:

Almost there. Just one more round…

…and we’re there! After five iterations all the bits have become completely dependent on the input bit. The strict avalanche condition appears to be satisfied.

Designing a byte-swap based mixing function

Multiplication by a large prime modulo 64 is a great way to design a hash function. The operation is injective on the 64 bit numbers, meaning it loses no information and can thus be inverted. It is also fast, modern processors can do several such operations in a single clock cycle. Finally, the inverse is non-trivial to compute, just what we want for a hash function.

Multiplication would be perfect if it was not for two weaknesses. First, it maps zeros to zeros. It is not hard to work around this, you can just seed the hash with the length of the data or offset zeros with some constant. The second weakness is more profound. Multiplication only escalates bits to higher bits! It causes the avalanche diagrams to become triangles instead of squares, just like what can be seen in the first image.

The multiplication operation needs an operation to complement it, something that will mix the higher bits into the lower bits. In all the hash functions I know, this is done by bitshifting or rotating the higher bits into lower positions. This process of multiplication and rotation can then repeated until the result is sufficiently mixed.

The problem is how much do we rotate? If we rotate by 32 bits, the highest bits get mixed into bit 32, which is still quite high. If we rotate by 60 bits, the highest bits are nicely mixed into the lowest bits, but only four of them get mixed.

What we would ideally like to do is mix the best-mixed bits into the least mixed bits, that is mix bit 64 into bit 1, mix bit 63 into bit 2, etcetera. This entails reversing the order of the bits. Despite some cool algorithms in "Hacker’s Delight", this is still a slow operation.

And then it struck me: there is a single instruction operation that does

almost the same as reversing all the bits: it reverses all the bytes!

It’s not very well known unless you’re writing low level code that mixes

little endian and big endian representations. But still, it is there. To

access it you can use the GCC builtin function __builtin_bswap64, or

this wrapper:

inline void byte_swap(uint64_t& value)

{

value = __builtin_bswap64(value);

}

Now let’s build a hash function using byte swap! Start simple: multiply and swap:

const uint64_t k64 = 0x436174bab1d5558dULL;

inline uint64_t quick_mix(uint64_t& value)

{

value *= k64;

byte_swap(value);

value *= k64;

}

The constant k64 is a large prime that was found by simulated

annealing. (I took this idea, and everything else, besides the byte-swap

from the author of SMHasher and MurMurHash.) You start with a random

value. Measure its quality. Flip some bits. Measure it again. Keep the

change if it’s an improvement. Repeat until satisfied.

Pseudo-Hadamard transform

The quick_mix function is good for mixing a single integer, but we

need to mix several integers. Two input values and two state values.

A good way to mix integers two integers such is the Pseudo-Hadamard transform:

inline void hadamard(uint64_t& a, uint64_t& b)

{

a += b;

b += a;

}

The net result is that a' = a + b and b' = a + 2b, both a' and

b' depend on a and b. The operation effectively diffuses a and

b into each other. It is also invertible, so no information is lost be

using it.

It can be extended to more variables by recursions:

inline void hadamard(uint64_t& a, uint64_t& b, uint64_t& c, uint64_t& d)

{

hadamard(a, b);

hadamard(c, d);

hadamard(a, c);

hadamard(b, d);

}

This is a good function to mix four integers. It uses a total of eight additions, two of which can be pipelined at a time.

For my hash function I can get away with a variation that is faster, but less good. The idea is to use addition modulo 2 instead of modulo 2⁶⁴. That is, to use xor instead of add:

inline void quick_hadamard(uint64_t& a, uint64_t& b)

{

a ^= b;

b ^= a;

}

If you meditate on this for a moment you will see that it amounts to:

inline void quick_hadamard(uint64_t& a, uint64_t& b)

{

b ^= a;

swap(a, b);

}

So the output a' will depend on both a and b, but b' will only

depend on a. Still, it will turn out to be good enough. The reason it

is faster is that the swap operation can often be folded in other

operations.

The four integer Hadamard transform simplifies to:

inline void quick_hadamard(uint64_t& a, uint64_t& b, uint64_t& c, uint64_t& d)

{

b ^= a; d ^= c;

d ^= b; c ^= a;

swap(a, d); swap(b, c);

}

The net effect is:

a' = a ^ b ^ c ^ d

b' = a ^ c

c' = a ^ b

d' = a

A 256 bit to 128 bit mixing function

From the quick_mix and quick_hadamard above I constructed the

following hash function:

void quick_mix(uint64_t& hash0, uint64_t& hash1, uint64_t input0, uint64_t input1)

{

quick_hadamard(hash0, hash1, input0, input1);

quick_mix(hash0);

quick_mix(hash1);

}

First a xor Psuedo-Hadamard tranform is used to mix the input into the

state, then the state is scrambled using quick_mix and an additional

multiply.

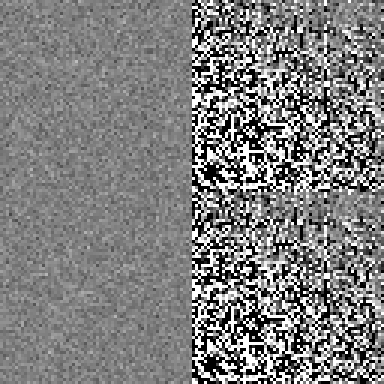

Let’s look at the avalanche diagrams:

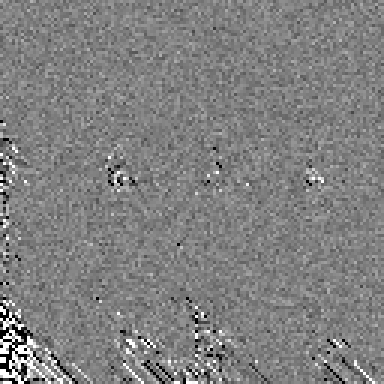

After the initial mixing, the result is already very nice. There is no

mixing of input1 into hash1, this is caused by the simplicity of my

Hadamard tranform. If you look closely you can see blocks eight by eight

pixels, this is due to the byte_swap working in blocks of eight bits.

You can even see small triangles caused by the multiply in the lower

left corner of the three large blocks. Every input bit affects at least

56 state bits.

If you look more closely you’ll see that the three mixed blocks have

identical patterns. This means there is a lot of correlation between the

bits. The MurMurHash function does not have this problem because it uses

slightly different functions on all the variables. This could be done

here by using different multiplication constants for hash0 and

hash1. But for now, let’s iterate once more:

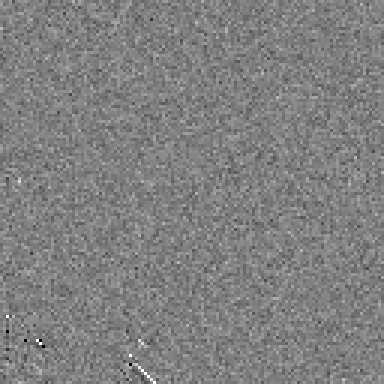

Wow! It looks as if hash0 is already perfectly mixed! It appears to

pass the strict avalanche criterion. At this point it would be very

interesting to test the bit independence criterion as well, maybe some

other time. Let’s iterate once more.

Perfect, in only three iterations! Clearly there is some merit in using

byte_swap. Time to benchmark this algorithm!

SMHasher

Time to benchmark this algorithm!

The algorithm was implemented as above. The final two multiplications

where moved to the start of the loop, so they can happen in tandem with

the loads and adds. This does not change the state transformation

between two quick_hadamard's in any way, so it should not change the

analysis above.

On my machine it passes all tests and performs as:

- 289 bytes/cycle - 9409.16 MiB/sec @ 3 ghz 1-byte keys - 26.46 cycles/hash

Compare this with MurMurHash 3:

- 755 bytes/cycle - 7883.52 MiB/sec @ 3 ghz 1-byte keys - 23.52 cycles/hash

Or with CityHashCrc which uses special CRC instructions:

- 056 bytes/cycle - 20188.66 MiB/sec @ 3 ghz 1-byte keys - 47.37 cycles/hash

All benchmarks where done on a Core i7 processor, your millage may vary.

Conclusion

We’ve constructed a promising non-cryptographic hash function using only multiply, byte swap and xor. As far as I know, this is the only hash function that does not use rotates or shifts, which makes it rather unique!

Even though it passes all the tests in SMHasher, I am concerned that there may be a lot of correlation in the bits which goes undetected. It would be good to build in some defenses and measure the quality of the bit independence criterion.

Source code

mulswap-avalanche.cpp: The program used to compute avalanche diagrams. It requires libpng.

smasher-mulswaphash.patch: Patch to include MulSwapHash in SMHasher.